Experiments in Computational Criticism #6: "'Golf', 'Tennis', 'Shuttlecock', and 'Football' in Victorian Scientific Periodicals"

In a previous experiment, I attempted to see whether “cricket” was associated with the nation within the discourse of Victorian science. I could not find a way to do this successfully. In this experiment, I attempted to see whether other sports were associated with nation or empire in Nature, Notices of the Proceedings at the Meetings of the Members of the Royal Institution, Philosophical Magazine, Proceedings of the Royal Society of Edinburgh, Proceedings of the Royal Society of London, and the Reports of the BAAS. Again, at the end of this experiment, I did not feel I had strong enough results to argue in the affirmative. The results are still useful, however, for demonstrating how I approach this kind of work.

Methodology: Recreational Reckoning

Experimental Question

I complete my distant readings of texts using packages others have developed in R, which can be a powerful tool for better understanding texts. It isn't always necessary to have a fully testable hypothesis in mind; visualizing texts can be a way of discovering things, especially when you are willing to have fun exploring the many ways in which an analysis can be customized. On the other hand, because the data can be manipulated so easily, it is easy to fall into the trap of thinking one has observed a feature in the text and then manipulating the data to draw out that feature. Fishing for information that supports a theory one already holds is a real problem in the field that scholars such as those in the Stanford Literary Lab have labelled “computational criticism.”

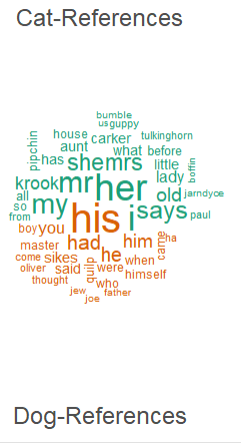

There are several principles that can be used to approach objective experimentation in automated text analysis, as discussed in Justin Grimmer and Brandon M. Stewart's “Text as Data: The Promise and Pitfalls of Automatic Content Analysis Methods for Political Texts” (Political Analysis, 2013). Unlike the social sciences, however, the humanities generally proceed not through testable and reproducible experiments but through the development of ideas. Recreational computational criticism (what I call 'Recreational Reckoning') therefore asks only that you choose one question for your analysis to answer. For example: “Does Dickens's Bleak House include more masculine or feminine pronouns?”; “What topics are central to the Sherlock Holmes canon?”; or “Do novel titles become longer or shorter over the course of the nineteenth century?” New features may become observable while pursuing this analysis, and it is up to the critic to theorize about what a newly visualized feature means. For this project, my question was whether references to Britain or the British Empire would be closely associated with references to golf, tennis, shuttlecock, and football.

Why R?

R isn't the only tool one can use for visualizing texts. However, I have found that R's computational methods shine when a text is too long, or there are too many texts, to read quickly. They are also useful when you have a specific methodology in mind or prioritize customizability in the data mining or the visualization. For quick visualizations such as word clouds, Voyant (https://voyant-tools.org) is probably a better choice.

Downloading R

The first step in using this methodology is obviously to download R. This can be done here (https://www.r-project.org). Users should also download RStudio, an environment which will make running the code easier. (If you are reading this in R/RStudio, then congratulations on already having started!)

Setting Directory

Once R is installed, the next step in analyzing your data is choosing a workspace. I recommend creating a new folder for each project. This folder will be your working directory. The working directory in R is generally set with the “setwd()” function. Here, however, we're going to be working within R Markdown files (.Rmd). R Markdown relies on a package called knitr, which by default runs a document's code with the folder containing the .Rmd file as its working directory. So I recommend creating a new folder and then downloading these R Markdown files into the folder where you want to work. For example, you might create a folder called “data” on your computer desktop, in which case your working directory would be something like “C:/Users/Nick/Desktop/data”. You can check that your working directory is indeed in the right place by using the “getwd()” function below.

getwd()

Downloading Packages

The next step is to install the packages that will be required. My methodology makes use of several packages, depending on the task. Rather than loading the libraries for each script, I generally find it more useful to install and initialize all the packages I will be using at once.

Packages are installed with the “install.packages()” function. However, this step only has to be completed once.

“ggmap” is a package for visualizing location data.

“ggplot2” is a package for data visualizations. More information can be found here (https://cran.r-project.org/web/packages/ggplot2/index.html).

“pdftools” is a package for reading pdfs. In the past, you had to download a separate pdf reader, and it was a real pain. You, reader, are living in a golden age. Information on the package can be found here (https://cran.r-project.org/web/packages/pdftools/pdftools.pdf).

“plotly” is a package for creating interactive plots.

“quanteda” is a package by Ken Benoit for the quantitative analysis of texts. More information can be found here (https://cran.r-project.org/web/packages/quanteda/quanteda.pdf). quanteda has a great vignette to help you get started (here). There are also exercises available here.

“readr” is a package for reading in certain types of data. More information can be found here (https://cran.r-project.org/web/packages/readr/readr.pdf).

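“SnowballC” is a package for stemming words: cutting the ends off words as a way of lowering the dimensions of the data. For instance, “working”, “worked”, and “works” all become “work”. (Stemming is a cruder relative of lemmatization, which maps each word to its dictionary form.)

“stm” is a package for estimating structural topic models.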

“tm” is a simple package for text mining. An introduction to the package can be found here (https://cran.r-project.org/web/packages/tm/vignettes/tm.pdf).

“tokenizers” is a package that splits text into tokens (words, sentences, and so on), returning them as character vectors. An introduction to the package can be found here (https://cran.r-project.org/web/packages/tokenizers/vignettes/introduction-to-tokenizers.html).

install.packages("ggmap")

install.packages("ggplot2")

install.packages("pdftools")

install.packages("plotly")

install.packages("quanteda")

install.packages("readr")

install.packages("SnowballC")

install.packages("stm")

install.packages("tm")

install.packages("tokenizers")
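Because installing only has to happen once, it can be convenient to check which packages are already present and install only the missing ones when re-running the notebook. The sketch below does that with base R's `installed.packages()`; the helper name `missing_packages()` and the `interactive()` guard are my own assumptions, not part of any package.

```r
# Hypothetical helper: returns the packages from `pkgs` that are not yet installed.
missing_packages <- function(pkgs) {
  installed <- rownames(installed.packages())
  pkgs[!pkgs %in% installed]
}

project_packages <- c("ggmap", "ggplot2", "pdftools", "plotly", "quanteda",
                      "readr", "SnowballC", "stm", "tm", "tokenizers")

to_install <- missing_packages(project_packages)
# Only contact CRAN when something is missing and a person is at the keyboard.
if (length(to_install) > 0 && interactive()) {
  install.packages(to_install)
}
```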

Loading Libraries

The next step is to load the libraries for these packages into your environment, which is accomplished with the “library()” function.

library(ggmap)

library(ggplot2)

library(quanteda)

library(pdftools)

library(plotly)

library(readr)

library(SnowballC)

library(stm)

library(tm)

library(tokenizers)
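The separate `library()` calls above can also be collapsed into one step that reports which packages loaded. This is a minimal sketch assuming a hypothetical helper, `load_packages()`, built on base R's `require()` with `character.only = TRUE`; unlike `library()`, `require()` returns `FALSE` with a warning instead of stopping when a package is missing.

```r
# Hypothetical helper: attaches each package and returns a named TRUE/FALSE per package.
load_packages <- function(pkgs) {
  vapply(pkgs, function(p) {
    suppressPackageStartupMessages(
      require(p, character.only = TRUE, quietly = TRUE)
    )
  }, logical(1))
}
```

For this project the call would be `load_packages(c("ggmap", "ggplot2", "quanteda", "pdftools", "plotly", "readr", "SnowballC", "stm", "tm", "tokenizers"))`, and any `FALSE` in the result points to a package that still needs installing.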

A Note About Citation

Most of these software packages are written by academics, and reliable, easy-to-use software is difficult to make. If you use these packages in your published work, please cite them. In R you can even see how the authors would like to be cited (and get a BibTeX entry).

citation("ggplot2")

citation("quanteda")

citation("pdftools")

citation("plotly")

citation("readr")

citation("SnowballC")

citation("stm")

citation("tm")

citation("tokenizers")
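If you want all of these as BibTeX at once, base R's `toBibtex()` converts a `citation()` object into BibTeX lines. The sketch below gathers the entries for several packages into one character vector; the helper name `collect_bibtex()` and the output file name `packages.bib` are hypothetical.

```r
# Hypothetical helper: BibTeX entries for several packages, separated by blank lines.
collect_bibtex <- function(pkgs) {
  unlist(lapply(pkgs, function(p) c(as.character(toBibtex(citation(p))), "")))
}
```

For example, `writeLines(collect_bibtex(c("ggplot2", "quanteda", "tm")), "packages.bib")` writes a file that a reference manager can import.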

Loading Data and Setting Variables

I had already acquired .txt volumes of these texts, so I simply needed to load the data and define a few parameters that will be useful later. The script goes through every word in the .txt files, stems it, and checks whether it matches one of the search terms: golf, tennis, football, and shuttlecock. It is also helpful to record the words that occur around each match, to provide context. The “conlength” variables set three different sizes of “window” for this purpose: 250, 3, and 10 words on either side of the match. For instance, “ProfSportsshortconlength2” is set to three, meaning the final dataset will have a column showing the three words to either side of the matched term.
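A context window of size n around a match at position j is simply the tokens from j − n to j + n, which gives 2n + 1 words with the match in the middle. Near the start or end of a text the window has to be clipped so it never asks for positions that do not exist. A minimal sketch, using a hypothetical helper `kwic_window()` and a seven-token snippet:

```r
# Hypothetical helper: the `n` tokens either side of position `j`,
# clipped to the first and last token of the text, joined with spaces.
kwic_window <- function(tokens, j, n) {
  from <- max(1, j - n)
  to <- min(length(tokens), j + n)
  paste(tokens[from:to], collapse = " ")
}

tokens <- c("in", "general", "and", "golf", "links", "in", "particular")
kwic_window(tokens, j = 4, n = 3)  # "in general and golf links in particular"
kwic_window(tokens, j = 1, n = 3)  # "in general and golf"
kwic_window(tokens, j = 7, n = 3)  # "golf links in particular"
```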

templocation <- paste0(getwd(),"/Documents")

ProfSportslocations2 <- c(paste0(templocation,"/Nature/Volumes"),paste0(templocation,"/Philosophical-Magazine/Volumes"),paste0(templocation,"/Reports-of-the-BAAS/Reports"),paste0(templocation,"/Royal-Institution/Proceedings"),paste0(templocation,"/Royal-Society-of-Edinburgh/Proceedings"), paste0(templocation,"/RSL/Proceedings"))

ProfSportsIndex2 <- c("Nature","Philosophical-Magazine","BAAS","Royal-Institution","RSE","RSL")

ProfSportslongconlength2 <- 250

ProfSportsshortconlength2 <- 3

ProfSportsPOSconlength2 <- 10

ProfSportssearchedtermlist2 <- c("golf","tennis","football","shuttlecock")

ProfSportsoutputlocation2 <- paste0(getwd(),"/WordFlagDataFrames")

ProfSportsWordFlagdfPath2 <- paste0(ProfSportsoutputlocation2,"/","ProfSportsWordFlagdf2.txt")
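Since the search below takes a long time, it can help to check the paths before starting. This is a sketch with a hypothetical helper, `preflight()`: it warns about corpus folders that do not exist and creates the output folder, because `write.table()` will not create missing folders on its own.

```r
# Hypothetical helper: catch path problems before the long search starts.
preflight <- function(corpus_dirs, output_dir) {
  # A missing corpus folder gives list.files() nothing to return for that periodical.
  missing <- corpus_dirs[!dir.exists(corpus_dirs)]
  if (length(missing) > 0) {
    warning("Missing corpus folders: ", paste(missing, collapse = ", "))
  }
  # write.table() will not create folders, so make sure the output folder exists.
  dir.create(output_dir, showWarnings = FALSE, recursive = TRUE)
  invisible(missing)
}
```

With the variables above, the call would be `preflight(ProfSportslocations2, ProfSportsoutputlocation2)`.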

To create the data frame compiling every reference to a term, run the following script. Be aware that this takes quite a while, so if you already have a saved dataset, load it with the script below instead.

dir.create(ProfSportsoutputlocation2, showWarnings = FALSE) # write.table() below cannot create this folder itself
if (!file.exists(ProfSportsWordFlagdfPath2)) { # only rebuild the data frame if it has not already been saved
  ProfSportsstemsearchedtermlist2 <- unique(wordStem(ProfSportssearchedtermlist2)) # stems the list of terms you want to search for
  ProfSportsWordFlagcols2 <- c("Text", "Text_ID", "ProfSportsstemsearchedterm2", "Lemma", "Lemma_Perc", "KWIC", "Total_Lemma", "Date", "Category", "Short_KWIC", "POS_KWIC", "Current_Date", "Corpus")
  ProfSportsWordFlagmat2 <- matrix(character(0), nrow = 0, ncol = 13, dimnames = list(NULL, ProfSportsWordFlagcols2))
  for (g in seq_along(ProfSportslocations2)) {
    tempdocloc <- ProfSportslocations2[g]
    files <- list.files(path = tempdocloc, pattern = "\\.txt$", full.names = TRUE) # creates vector of .txt file names
    for (i in seq_along(files)) {
      fileName <- read_file(files[i])
      Encoding(fileName) <- "UTF-8" # tokenize_words() expects UTF-8 text,
      fileName <- iconv(fileName, "UTF-8", "UTF-8", sub = "") # so drop any bytes that are not valid UTF-8
      ltoken <- unlist(tokenize_words(fileName, lowercase = TRUE, stopwords = NULL, simplify = FALSE))
      stemltoken <- wordStem(ltoken) # uses the SnowballC library to stem the entire text
      textID <- i
      TempTextName <- sub("\\.txt$", "", basename(files[i])) # file name without its folder or .txt extension
      for (p in seq_along(ProfSportsstemsearchedtermlist2)) {
        ProfSportsstemsearchedterm2 <- ProfSportsstemsearchedtermlist2[p]
        for (j in which(stemltoken == ProfSportsstemsearchedterm2)) { # position of every match in the text
          # context windows around the match, clipped so they never run past either end of the text
          longtempvec <- ltoken[max(1, j - ProfSportslongconlength2):min(length(ltoken), j + ProfSportslongconlength2)]
          shorttempvec <- ltoken[max(1, j - ProfSportsshortconlength2):min(length(ltoken), j + ProfSportsshortconlength2)]
          POStempvec <- ltoken[max(1, j - ProfSportsPOSconlength2):min(length(ltoken), j + ProfSportsPOSconlength2)]
          temprow <- matrix(c(
            TempTextName,                         # Text
            textID,                               # Text_ID
            ProfSportsstemsearchedterm2,          # ProfSportsstemsearchedterm2
            j,                                    # Lemma: position of the match in the text
            (j / length(stemltoken)) * 100,       # Lemma_Perc: position as a percentage of the text
            paste(longtempvec, collapse = " "),   # KWIC
            length(stemltoken),                   # Total_Lemma
            strsplit(TempTextName, "_")[[1]][1],  # Date, taken from the start of the file name
            NA,                                   # Category (not filled in for this experiment)
            paste(shorttempvec, collapse = " "),  # Short_KWIC
            paste(POStempvec, collapse = " "),    # POS_KWIC
            format(Sys.time(), "%Y-%m-%d"),       # Current_Date
            ProfSportsIndex2[g]                   # Corpus
          ), nrow = 1, dimnames = list(NULL, ProfSportsWordFlagcols2))
          ProfSportsWordFlagmat2 <- rbind(ProfSportsWordFlagmat2, temprow)
        }
      }
      print(paste0(i, " out of ", length(files), " in corpus ", g, " out of ", length(ProfSportslocations2))) # lets the user watch as the code runs for long searches
    }
  }
  ProfSportsWordFlagdf2 <- as.data.frame(ProfSportsWordFlagmat2, stringsAsFactors = FALSE)
  write.table(ProfSportsWordFlagdf2, ProfSportsWordFlagdfPath2)
  ProfSportsWordFlagdf2
}

If you have a previously constructed dataset, you can load it using a script like this.

ProfSportsWordFlagdf2 <- read.table(ProfSportsWordFlagdfPath2)

Results

This time I got 341 flags, a much more substantial number. Here is a random sample of what that data looks like.

ProfSportsWordFlagdf2[sample(1:nrow(ProfSportsWordFlagdf2),5),c("Text","ProfSportsstemsearchedterm2","Short_KWIC")]

## Text ProfSportsstemsearchedterm2

## 1 187105-187111_Nature_Vol.04_v00 golf

## 56 188611-188704_Nature_Vol.35_v00 tenni

## 65 188705-188710_Nature_Vol.36_v00 golf

## 36 188311-188404_Nature_Vol.29_v00 golf

## 173 189505-189510_Nature_Vol.52_v00 golf

## Short_KWIC

## 1 the europe i golfe de naples it

## 56 the exception of tennis has little of

## 65 decl on meridian golf in theory as

## 36 is linenalgen des golfes von neapel table

## 173 in general and golf links in particular
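The sample shows one cost of stemming: “golfe” and “golfes” (French for “gulf”, here the Gulf of Naples) both stem to “golf”, so they are flagged alongside the sport. One rough way to screen these out is to keep only rows whose short window contains an exact English word form. The sketch below assumes a hypothetical helper, `has_exact_form()`, and a hand-picked list of forms; it checks every token in the window rather than only the centre one, and it cannot judge context, so rows that pass still need reading.

```r
# Hypothetical helper: TRUE where a Short_KWIC window contains one of the exact word forms.
has_exact_form <- function(kwic, forms) {
  vapply(strsplit(kwic, " ", fixed = TRUE),
         function(tokens) any(tokens %in% forms),
         logical(1))
}

# Assumed list of English forms worth keeping.
english_forms <- c("golf", "golfs", "tennis", "football", "footballs",
                   "shuttlecock", "shuttlecocks")

# The five Short_KWIC values from the random sample above.
sample_kwic <- c("the europe i golfe de naples it",
                 "the exception of tennis has little of",
                 "decl on meridian golf in theory as",
                 "is linenalgen des golfes von neapel table",
                 "in general and golf links in particular")

has_exact_form(sample_kwic, english_forms)
# → FALSE TRUE TRUE FALSE TRUE
```

On the full dataset this would be `ProfSportsWordFlagdf2[has_exact_form(as.character(ProfSportsWordFlagdf2$Short_KWIC), english_forms), ]`; the `as.character()` guards against the column having been read in as a factor.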

With the data in hand, I could now ask some questions about the corpus.

Question 1: Do references to these sports in Victorian Professional Science Publications increase over the course of the century?

This question matters for how we interpret the data: it is always worth considering the historical arc of references to specific keywords.

Script

We can visualize the years each of these references occurred in using the following script. Note that in order to find matches, the search terms were all transformed into a “stemmed” version of the word. “Football” became “footbal,” for instance, so that when searching the text it could flag both references to “football” (stemmed as “footbal”) and “footballs” (also stemmed as “footbal”).

library(ggplot2)

# Visualizing ProfSportsWordFlagdf2 by date (the year is the first four characters of the Date column)
p <- ggplot(ProfSportsWordFlagdf2, aes(x = as.numeric(substr(Date, 1, 4))))
pg <- geom_histogram(binwidth = 10, boundary = 0, closed = "left") # one bar per decade, e.g. 1870 to 1879
pl <- p + pg + scale_x_continuous(limits = c(1800, 1900)) + labs(x = "Date", y = "Frequency / Decade", title = "Appearances of Sports in Victorian Professional Science Periodicals") + facet_wrap(~ProfSportsstemsearchedterm2)
pl

Results

Almost all of these sports are mentioned more frequently at the end of the century. However, because this is just a raw count of references per decade, it may simply reflect the increase in the number of periodicals later in the century.
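One way to test that caveat is to divide the number of flags in each decade by the number of tokens published in that decade. The flag table only contains texts with at least one match, so the token totals would have to come from a separate count of every volume (for example, `length(ltoken)` recorded for each file). The sketch below assumes such vectors exist; the helper name `flags_per_million()` and its inputs are hypothetical, and the numbers in the usage are invented.

```r
# Hypothetical helper: flags per million tokens in bins of `binwidth` years.
flags_per_million <- function(flag_years, text_years, text_tokens, binwidth = 10) {
  bin <- function(y) (y %/% binwidth) * binwidth        # e.g. 1873 -> 1870
  tokens <- tapply(text_tokens, bin(text_years), sum)   # corpus size per bin
  flag_tab <- table(bin(flag_years))                    # matches per bin
  counts <- as.numeric(flag_tab)[match(names(tokens), names(flag_tab))]
  counts[is.na(counts)] <- 0                            # bins with text but no matches
  data.frame(bin = as.integer(names(tokens)),
             flags = counts,
             tokens = as.numeric(tokens),
             per_million = counts * 1e6 / as.numeric(tokens))
}

# Invented numbers, only to show the shape of the result.
res <- flags_per_million(flag_years  = c(1871, 1872, 1886, 1887, 1895),
                         text_years  = c(1861, 1871, 1876, 1886, 1895),
                         text_tokens = c(1e6, 1e6, 1e6, 2e6, 5e5))
res$per_million  # 0 1 1 2
```

If the per-million rate stays flat while the raw counts rise, the late-century increase is more plausibly a product of corpus growth than of growing interest in the sports.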

Question 2: What terms are most frequently associated with each of these sports?

Again, our central question was whether there are certain terms related to nationality or empire that are closely associated with these sports.

Script

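I tested this using the script below, looking only at the top 20 correlated words for each sport. The correlations are calculated only across the 250-word Key Words in Context windows, not across whole volumes, and CorrelationMin is set to 0.1, so every word plotted has a correlation of at least 0.1 with the sport.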

library(ggplot2)
library(tm)
library(tokenizers)
library(SnowballC)

CorrelationMin <- 0.1 # only keep words correlated at 0.1 or higher
ProfSportsstemsearchedtermlist2 <- unique(wordStem(ProfSportssearchedtermlist2)) # stems the list of terms you want to search for
datacorpus <- Corpus(VectorSource(ProfSportsWordFlagdf2$KWIC), readerControl = list(reader = readPlain)) # one document per KWIC window
data.tdm <- TermDocumentMatrix(datacorpus, control = list(removePunctuation = TRUE, stopwords = FALSE, tolower = TRUE, stemming = TRUE,
  removeNumbers = TRUE, bounds = list(global = c(1, Inf))))

for (q in seq_along(ProfSportsstemsearchedtermlist2)) {
  keyword <- ProfSportsstemsearchedtermlist2[q]
  assocdata <- findAssocs(data.tdm, keyword, CorrelationMin)
  top <- head(assocdata[[1]], 20) # findAssocs sorts by correlation, so this keeps the 20 strongest (or fewer, if fewer pass CorrelationMin)
  if (length(top) == 0) next # nothing passed the threshold for this sport
  tempdata2 <- data.frame(correlation = unname(top), keyword = keyword, AssociatedWord = names(top))
  p <- ggplot(tempdata2, aes(x = reorder(AssociatedWord, correlation), y = correlation))
  pg <- geom_col()
  pl <- p + pg + labs(x = "Associated Words", y = "Correlation", title = paste0("Words Associated with '", keyword, "' in Victorian Professional Science Periodicals")) + coord_flip()
  print(pl)
}
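The tm documentation describes `findAssocs()` as returning the terms whose correlation with a given term is at least `corlimit`. The base-R sketch below shows that calculation on a tiny term-document matrix: each row is a term, each column a document (here, a KWIC window), and each other term's row of counts is correlated with the keyword's row. It is not tm's own code (tm works on sparse matrices), the helper name `term_assocs()` is hypothetical, and the counts are invented.

```r
# Sketch: Pearson correlation of one term's counts with every other term's counts
# across documents. Rows = terms, columns = documents (KWIC windows).
term_assocs <- function(tdm, term, corlimit) {
  target <- tdm[term, ]
  others <- tdm[rownames(tdm) != term, , drop = FALSE]
  # Terms with constant counts have no defined correlation (NA); drop them.
  r <- suppressWarnings(apply(others, 1, function(row) cor(row, target)))
  r <- round(r[!is.na(r) & r >= corlimit], 2)
  sort(r, decreasing = TRUE)
}

# Invented counts for four windows, not data from the corpus.
toy <- matrix(c(1, 0, 1, 0,    # footbal
                1, 0, 1, 0,    # match
                0, 1, 0, 1,    # court
                1, 1, 1, 0),   # weather
              nrow = 4, byrow = TRUE,
              dimnames = list(c("footbal", "match", "court", "weather"),
                              paste0("doc", 1:4)))

term_assocs(toy, "footbal", 0.1)  # match 1.00, weather 0.58
```

Because the measure rewards words that appear in the same windows as the keyword, a rare word (including an OCR fragment) that happens to share a few windows with a sport can score highly.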

Results

The results did not end up being particularly illuminating about the relationship between these sports and the nation or empire. Some correlations are what one might expect, such as the connection between “courtyard” and “tennis,” or between “football” and “school.” Others are things I wouldn't have expected but can rationalize, such as the connection between “tennis” and “gamble.” Others may be the result of errors introduced in the Optical Character Recognition (OCR) process.

None of these results showed a correlation between these sports and the nation in the corpus. However, the association of “footbal” with “molecul” did strike me as interesting. It led me to a productive investigation into the history of comparisons between molecular coarseness and sports balls. This, I believe, is the power of recreational reckoning as a methodology: it often illuminates lines of research one might not otherwise have seen.